Meta has announced new safety features for Teen Accounts on Instagram, including a new option to block and report users directly from their private messages (DMs).

The newly added safety features are designed to give teen users on Instagram more context about the accounts they are messaging and help them spot potential scammers, the social media giant said in a blog post published on Wednesday, July 23.

Teen Accounts on Instagram come with enhanced privacy settings and parental controls. Accounts created by any user under the age of 18 are categorised as Teen Accounts, and their profiles are private by default.

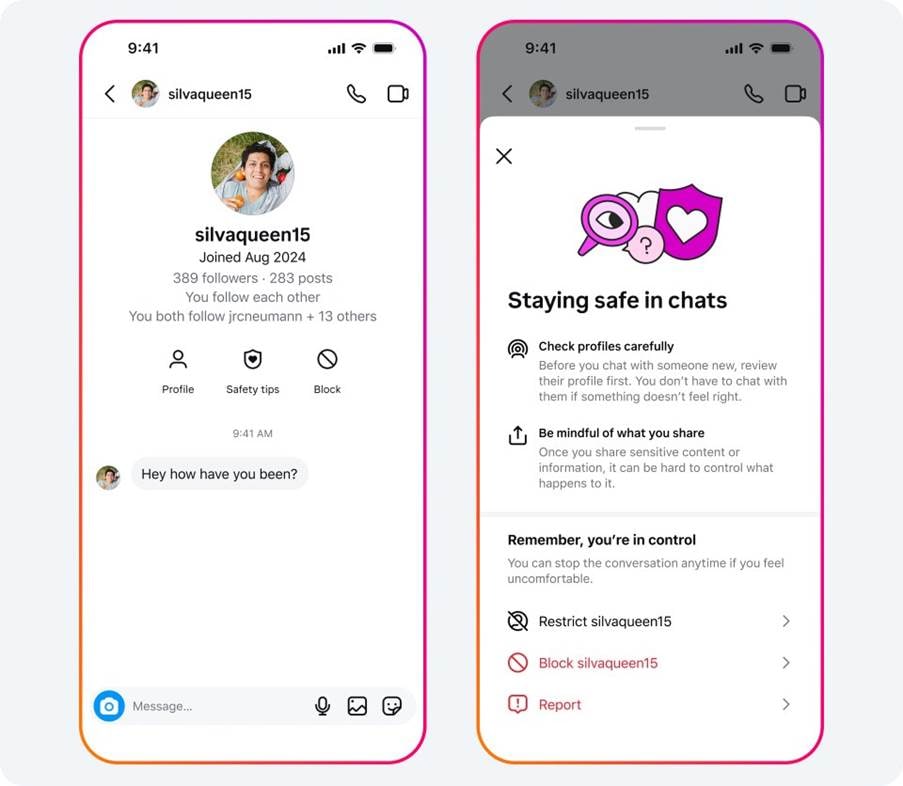

Meta said it will show Teen Account holders on Instagram new options to view safety tips and block an account. “They will also be able to see the month and year the account joined Instagram, prominently displayed at the top of new chats,” the company said.

New options to view safety tips. (Image: Meta)

Last year, Meta expanded Teen Accounts to its other platforms, such as Facebook and Messenger.

Meta also announced that it is extending some Teen Account protections to adult-managed accounts that primarily feature children. The minimum age to sign up for Instagram is 13. However, the platform does allow accounts representing children under 13, as long as they are managed by an adult and the account bio says so.

Now, such adult-managed accounts primarily featuring children will automatically be placed into Instagram’s strictest message settings, with Hidden Words enabled, to prevent unwanted messages and offensive comments.

“We’ll show these accounts a notification at the top of their Instagram Feed, letting them know we’ve updated their safety settings, and prompting them to review their account privacy settings too,” Meta said. These adult-run accounts featuring children under 13 will also not appear in recommendations, to prevent “potentially suspicious adults” from “finding these accounts in the first place.”

These changes are expected to be rolled out on Instagram in the coming months.

Key figures

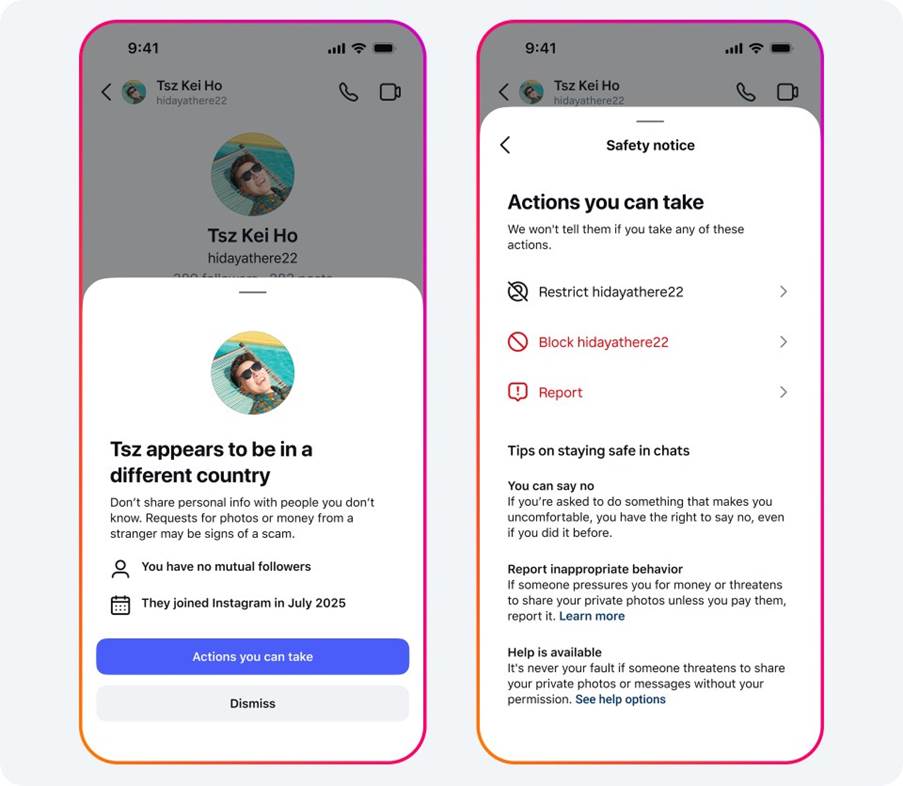

Meta further shared new data on the impact of its teen safety features. Over one million Instagram accounts were reported by under-18 users in June this year. Instagram’s new Location Notice, which lets users know when they are chatting with someone in another country, was viewed one million times, but only 10 per cent of users clicked on it to learn more about the steps they could take.

Instagram’s new Location Notice. (Image: Meta)

Nearly 135,000 Instagram accounts were taken down by the platform for leaving sexualised comments on, or requesting sexual images from, adult-managed accounts featuring children under 13. “We also removed an additional 500,000 Facebook and Instagram accounts that were linked to those original accounts,” the company said.

© IE Online Media Services Pvt Ltd
