DeepMind, Google's AI research organisation, has hinted that Artificial General Intelligence, popularly referred to as AGI, could arrive as early as 2030.

In a 145-page document co-authored by DeepMind co-founder Shane Legg, the authors warn that AGI could do “severe harm” and share some alarming examples of how it could lead to an “existential crisis” that could “permanently destroy humanity.”

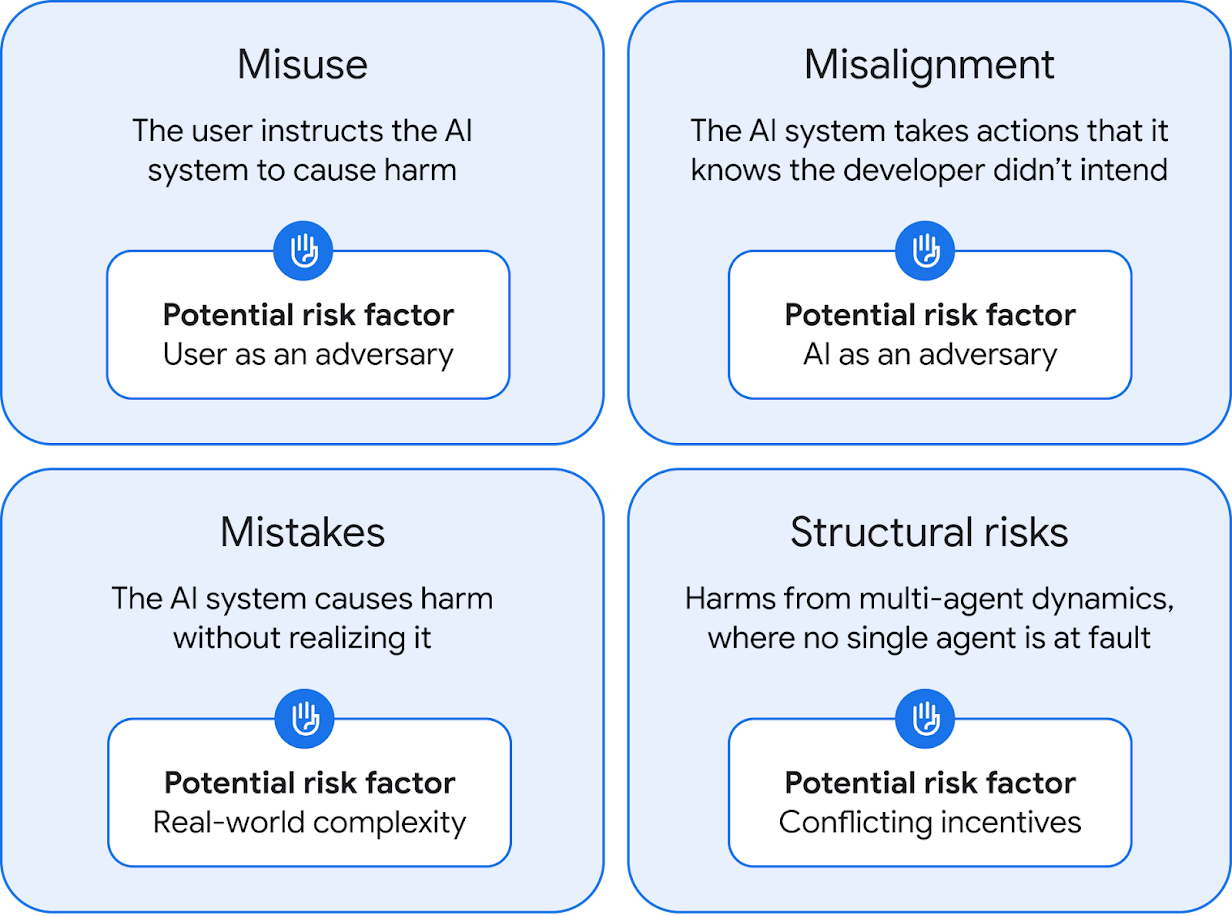

The risks posed by AGI are categorised into four sections. (Image Source: Google)

How can AGI harm humanity?

The newly published DeepMind document separates the risks posed by AGI into four categories: misuse, misalignment, mistakes and structural risks. While the paper discusses misuse and misalignment in detail, the other two risks are covered only briefly.

On misuse, the risk posed by AGI is similar to that of currently available AI tools, but since AGI will be much more powerful than current-generation large language models, the damage could be much greater. For example, a bad actor with access to it may ask it to find and exploit zero-day vulnerabilities or to create a virus that could someday be used as a weapon. To prevent this, DeepMind says developers will have to build safety protocols and identify and restrict the system’s ability to do such things.

If AGI is to help humans, it is important that the system aligns with human values. DeepMind says misalignment happens “when the AI system pursues a goal that is different from human intentions”, which sounds straight out of a Terminator movie.

For example, when a user asks an AI to book tickets for a movie, it may decide to hack into the ticketing system to get seats that are already booked. DeepMind says it is also researching the risk of deceptive alignment, where an AI system might realise that its goals do not align with those of humans and deliberately try to bypass the safety measures put in place. To counter misalignment, DeepMind says it currently uses amplified oversight, a technique that tells if an AI’s answers are good or not, but that might become difficult once AI gets more advanced.

As for mitigating mistakes, the paper does not offer a solution to the problem, but it does say that we should not let AGI get too powerful in the first place, by deploying it slowly and limiting its usability. Lastly, structural risks refer to the consequences of multi-agent systems that spew out false information so convincing that we may no longer know whom to trust.

DeepMind also said that its paper is just a “starting point for vital conversations” and that we should discuss the potential ways AGI could harm humans before deploying it.

© IE Online Media Services Pvt Ltd