Inside a fully packed conference centre in Las Vegas, Amazon Web Services (AWS) CEO Matt Garman’s message was simple and strategic: agents are not a flashy consumer gimmick; they are the next utility that enterprise workflows will depend on, and AWS intends to sell them with enterprise-grade guardrails through governance, regionalised control, and cost predictability.

A year earlier, Mr. Garman had laid out a practical, infrastructure-first roadmap for bringing generative AI into enterprise production. In that keynote—his first as AWS’s chief—he emphasised investments in custom chips, expanded instance families, and tightened integration between model-building tools, like SageMaker, and model-consumption platforms, like Bedrock, as companies sought to move from experiments to sustained, cost-efficient production.

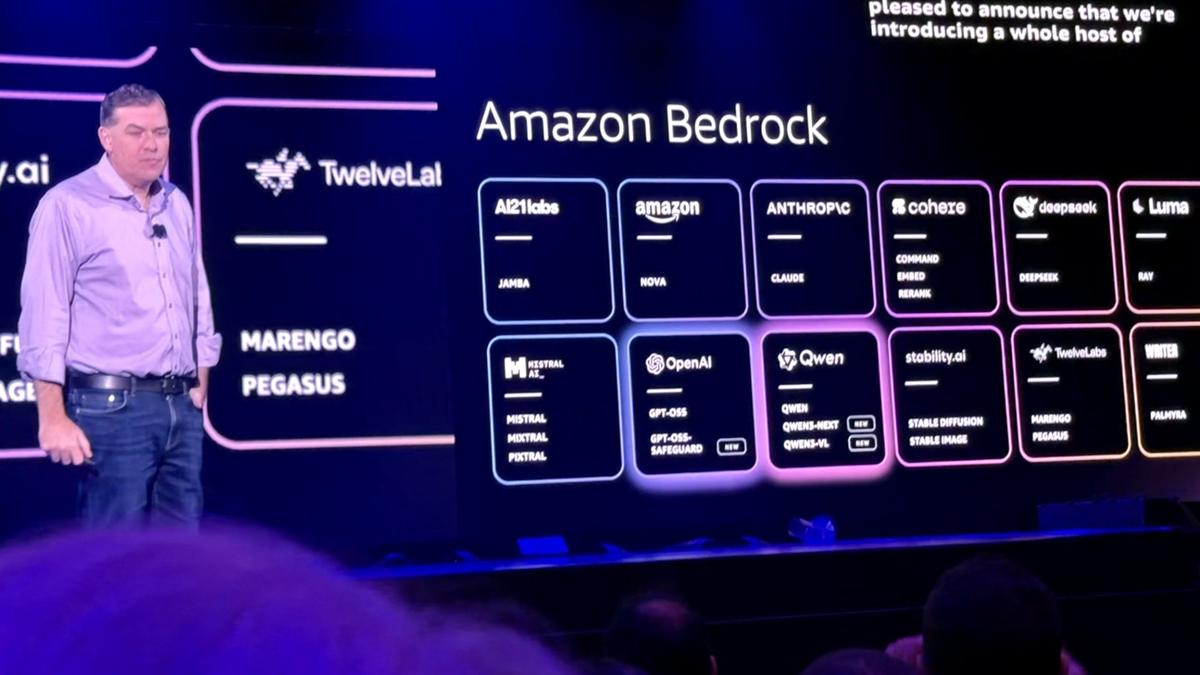

At re:Invent 2025, Mr. Garman echoed that infrastructure-first thesis and sharpened it around “agentic” capabilities. He showed how a combination of custom silicon, new foundation models, managed model-training platforms, and orchestration tooling can be composed into agents that automate multistep business workflows. This year’s announcements emphasised an integrated path: Forge and other developer-facing platforms for custom model training, expanded Bedrock offerings for enterprises wanting vetted models with governance, and production-ready agent frameworks for tasks spanning code generation, security orchestration, and operational automation.

The long game

Google and Microsoft have both staked large, visible claims on agentic AI, but they play different hands. Google pairs Gemini, its family of models and agent tooling, with deep product integration across Search and Workspace; its strengths are model innovation, multimodal reasoning, and product-level ubiquity that can surface agents inside everyday apps.

Microsoft marries its extensive enterprise footprint across Office, Teams and Dynamics with its longstanding OpenAI partnership to create agent experiences embedded into productivity software and IT operations, leaning heavily on Copilot branding and the idea of agents as productivity extensions.

Both hyperscalers are moving faster in visible product experiences because they can immediately surface models inside mass-market applications used daily by millions.

AWS, by contrast, plays the long game. It sells reliability and the ability to scale agents into mission-critical back-office systems, not just a Copilot in Word. Where Google’s approach is to put agents into end-user surfaces and Microsoft’s is to weave agents into office productivity and developer tooling, AWS is betting that enterprises will pay for predictable, governable agent fleets that run where their regulated data lives and that operators can monitor and control.

A race in multiple lanes

While Google and Microsoft may win the battle for faster product stories, AWS’s narrative sells well to CIOs and platform teams. But the critical question is whether enterprise buyers reward production-readiness and governance more than the sleekness of the demo.

Early signs suggest there is a market for the “plumbing-first” argument, but this is a race with multiple lanes. Model capability, developer experience, data governance and commercial terms will all determine who wins where. AWS is wagering that enterprises will prefer agent fleets that are governed, observable and sited close to their data—in clouds offering granular controls, regional sovereignty and predictable economics.

Commercial success, however, is not guaranteed. Companies will weigh the friction of integrating agentic systems into complex workflows and the cost of running them at scale. A recent report by McKinsey warns that value capture requires redesigning workflows and investing in human skills; if those investments do not materialise, agent deployments may underdeliver. AWS must therefore translate its infrastructural advantage into an equally compelling developer and operator experience. If agent authoring and observability remain too complex, customers may prefer the turnkey convenience of Google or Microsoft products.

Organisations will also assess reputational risk. They will look not only for capability, but for transparent safety practices and independent verification. The winning hyperscaler will be the one that combines model performance with governance, observability and a credible story around energy and compliance.

Workflow-centric vs task-centric

For CIOs watching these three hyperscalers, the roadmap is becoming clearer. They should prioritise pilot programmes that are workflow-centric rather than task-centric, redesign processes, invest in complementary human skills and build governance before scaling.

They should identify which vendor’s trade-offs align with their organisation’s constraints: rapid embedding into user interfaces and productivity apps may favour Google or Microsoft, while strict data locality, complex system integration and long-term cost predictability may make AWS’s infrastructure-first playbook more attractive.

And they must treat agents as products requiring lifecycle management, with telemetry, retraining pipelines and human oversight as non-negotiable components.

Google, Microsoft and AWS are not pursuing identical plays, and that diversity matters. Each hyperscaler will win in different lanes. The more interesting question is whether any one of them can combine model-level excellence with enterprise-grade governance and operational simplicity.

AWS’s re:Invent narrative is designed to answer “yes” to that question, but much of the answer will be written not in keynote demos but in enterprise service-level agreements, carrier-grade telemetry dashboards and the quiet spreadsheets that quantify cost of ownership over years.

The industry’s next phase will be judged by who can turn agentic promise into predictable outcomes.

Published – December 03, 2025 02:05 pm IST